Artificial Intelligence and the Modern Lawyer: Using Powerful AI Tools Effectively and Ethically

Over the last two years, MGS has devoted substantial effort to understanding the benefits, costs and risks of incorporating artificial intelligence (AI) into our legal workflow. AI is no longer a theoretical concept for the legal profession. It is embedded in the tools lawyers use every day – legal research platforms, document management systems, billing software, email, and even word processing applications. Whether attorneys actively embrace AI or not, it is increasingly shaping how legal services are delivered. Inevitably, the effective and ethical use of AI has become part of what it means to provide competent legal representation.

AI Competence Is Now a Core Professional Responsibility for Lawyers

AI will not replace lawyers, but lawyers who do not understand how to use AI risk being replaced by those who do. This observation simply recognizes that competence includes familiarity with modern tools and their limitations. The ABA has expressly tied lawyers’ duty of competence to an understanding of the benefits and risks of generative AI tools, reinforcing that attorneys must stay sufficiently informed to use these tools responsibly.

Lawyers should assume that AI is already in their practice environment. Even if a lawyer never opens a standalone generative AI platform, AI features now appear across common enterprise software and research products. Associates and staff may also be using ChatGPT or similar tools, whether the firm knows it or not. The professional question is not “Will we encounter AI?” but “How do we supervise it, validate it, and use it without compromising our ethical duties?”

What Generative AI Is – and Why Lawyers Must Understand Its Limits

Modern generative AI tools (including large language models) are best understood as sophisticated prediction engines: they generate plausible text based on patterns in training data and user prompts. That makes them excellent assistants for drafting, summarizing, organizing, and brainstorming. But it also explains why they can generate incorrect statements with great confidence, including “hallucinated” legal authorities – cases, quotations, or citations that appear authentic but are entirely fabricated.

That is why lawyers must treat AI as a starting point, not a conclusion. The lawyer remains responsible for verifying accuracy, analyzing the law, and exercising professional judgment.

Practical AI Use Cases for Law Firms and Legal Professionals

When used thoughtfully, AI can meaningfully improve efficiency and consistency across a range of legal tasks – particularly those that are language-heavy and process-driven.

Using AI for Legal Research and Issue Spotting. AI tools can help generate a quick orientation to an unfamiliar subject, identify questions to pursue, and summarize a set of cases or statutes. But summaries are not substitutes for reading authority, and outputs must be validated in trusted research systems.

AI-Assisted Legal Document Drafting. AI can produce first drafts of client communications, discovery requests, internal memoranda, or outlines. The value lies not in replacing lawyer drafting, but in reducing the “blank page” burden and allowing attorneys to focus on strategy, precision, and judgment.

Summarizing Voluminous Materials with AI. AI is particularly effective at condensing long documents – depositions, medical records, contracts, or discovery responses – into usable summaries. This is an ideal “start small” use case: low risk when handled correctly, high return in time saved, and easily checked against the underlying record.

AI for Timekeeping, Billing and Administrative Support. AI can assist with creating time-entry narratives, categorizing tasks by phase, and improving consistency. As always, lawyers remain responsible for ensuring that billing is accurate, reasonable, and consistent with client expectations and ethical rules.

Prompt Engineering for Lawyers: Prompts, Personas, and Agents

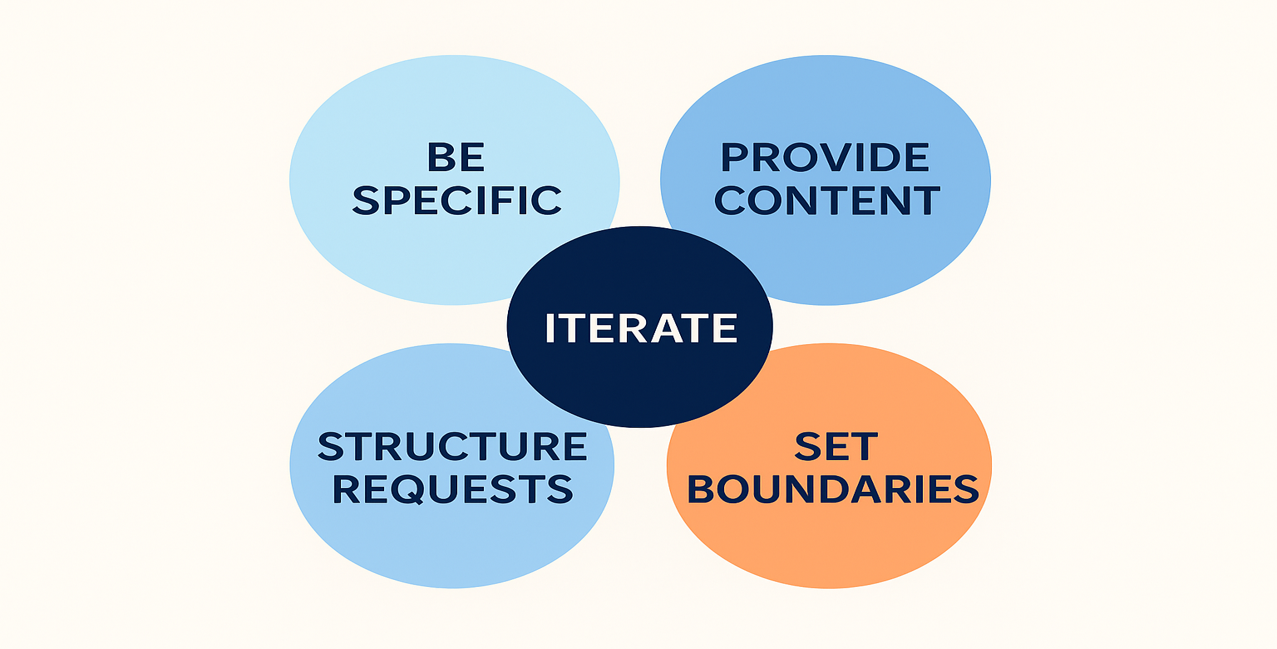

In virtually all things related to AI, output quality depends heavily on input quality. The most important new skill lawyers must develop is how to request – or “prompt” – the desired output from the AI model. Effective prompts are specific, contextual, and structured. A lawyer will get much better results if, instead of asking an AI tool to “write a legal memo,” he or she specifies the jurisdiction, the issue, the intended audience, the format, word limits, and the desired tone.

A practical framework is the “golden prompt” approach: define the action, topic, constraints, format, and tone. Treat prompting as iterative – refine outputs through follow-up questions, edits, and targeted constraints. When lawyers treat AI like a junior assistant that improves with instruction, the results become more reliable and more usable.
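The five elements above can be sketched as a small, reusable template. This is only an illustration: the `GoldenPrompt` class, its field names, and the sample memo request are hypothetical, not part of any AI product, and the rendered text would still need to be pasted into (and refined within) whatever tool the firm has approved.

```python
from dataclasses import dataclass, field


@dataclass
class GoldenPrompt:
    """Hypothetical container for the five 'golden prompt' elements."""
    action: str
    topic: str
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""
    tone: str = ""

    def render(self) -> str:
        """Assemble the elements into one labeled, structured prompt."""
        lines = [f"Action: {self.action}", f"Topic: {self.topic}"]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        if self.output_format:
            lines.append(f"Format: {self.output_format}")
        if self.tone:
            lines.append(f"Tone: {self.tone}")
        return "\n".join(lines)

    def refine(self, extra_constraint: str) -> "GoldenPrompt":
        """Return a new prompt with one added constraint (iterative refinement)."""
        return GoldenPrompt(
            self.action,
            self.topic,
            [*self.constraints, extra_constraint],
            self.output_format,
            self.tone,
        )


# Illustrative request only; the jurisdiction and issue are made up.
memo_prompt = GoldenPrompt(
    action="Draft an internal research memo",
    topic="Enforceability of a non-compete clause",
    constraints=[
        "Jurisdiction: Illinois",
        "Audience: supervising partner",
        "Maximum 800 words",
        "Flag every cited authority for verification",
    ],
    output_format="Issue, short answer, analysis, open questions",
    tone="Neutral and precise",
)
print(memo_prompt.render())
```

Calling `memo_prompt.refine("Do not include client-identifying facts")` returns a tightened copy without altering the original, which mirrors the iterative follow-up described above.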

As lawyers master the art of prompting quality responses to individual requests, they can move on to creating specific AI agents: AI systems configured to act semi-autonomously within defined boundaries that can chain together prompts, tools, and workflows. Agents are often used for managing repetitive or structured legal tasks.

Ethical Guardrails for Lawyers Using AI

Courts and bar organizations are at the forefront of guiding the proper use of AI by lawyers. ABA Formal Opinion 512 and the ARDC’s Illinois Attorney’s Guide to Implementing AI are just two examples. The ethical framework governing AI use is not new – it rests on familiar professional duties applied to new tools.

Attorney Competence and AI Tools: Lawyers must understand what the tool can and cannot do and must apply appropriate review and verification.

Confidentiality Risks When Using AI: Lawyers must evaluate data security and avoid exposing protected information to tools or vendors without adequate safeguards (and, where necessary, client consent).

Candor to the Court and Meritorious Filings: Lawyers must ensure filings are accurate, legally tenable, and supported by real authority – not AI-generated fabrications.

Supervision of AI Use in the Law Firm Environment: AI should be treated like a nonlawyer assistant – useful but requiring oversight.

These obligations apply regardless of whether the tool is branded as a consumer chatbot, an “AI assistant” embedded in office software, or an AI feature offered through a legal vendor.

Courts Are Scrutinizing AI Misuse by Lawyers

The ethical risks of generative AI in law are no longer abstract. The most prominent early example is Mata v. Avianca, Inc., decided by the United States District Court for the Southern District of New York in June 2023.

In Mata, plaintiff’s counsel submitted a filing that included multiple non-existent judicial opinions – complete with fabricated quotations and citations – generated by ChatGPT. When opposing counsel and the court were unable to locate the cited authorities, the court required counsel to produce copies. Counsel ultimately submitted purported “opinions” that were also fabricated and continued to stand by the citations even after the problem was brought to their attention.

The court’s sanctions order is notable for two reasons. First, it expressly recognized that “there is nothing inherently improper about using a reliable artificial intelligence tool for assistance.” Second – and more important – it underscored that existing rules impose a gatekeeping role on attorneys to ensure the accuracy of what they file. The court imposed sanctions, including a monetary penalty, emphasizing that lawyers cannot outsource their duty of reasonable inquiry and candor to a generative AI system.

Mata quickly became a reference point for the profession because it illustrates how AI failure typically occurs: not because the technology is malicious, but because the lawyer failed to validate output that looked plausible. In that respect, the case aligns closely with the ABA’s later guidance that lawyers must understand AI’s limitations and supervise its use to avoid inaccurate submissions and client harm.

Green Building Initiative, Inc. v. Peacock offers a contrasting example. There, a lawyer submitted a brief citing a phantom case to the District Court in Oregon, but the firm took active steps to acknowledge and rectify the mistake. The court declined to impose formal sanctions on the lawyer because the law firm had already implemented an AI Use Policy and had already compensated clients for the mishap. The case illustrates that competence in AI usage matters not only at the individual lawyer level but also at the law firm level, through policies, protocols, and continuous training.

Key Lessons from Court Sanctions Involving AI Use

Taken together, ethics guidance and judicial orders sanctioning AI misuse reveal a clear, consistent message:

AI is Permitted, but Blind Reliance is Not. Courts and ethics authorities have both emphasized that using AI is not inherently unethical; failing to verify AI output – especially citations and quotations – is.

Hallucinated Citations Trigger Traditional Sanctions. Judges have applied existing standards (e.g., Rule 11’s reasonable inquiry requirements and the court’s inherent authority) when lawyers submit fictitious authority generated by AI.

Supervision is the Central Issue. AI should be treated like a nonlawyer assistant: helpful for first drafts and efficiency, but requiring lawyer oversight, review, and responsibility for the final product.

These points should guide everyday practice and management decisions – what tools to approve, what training to provide, what categories of work are appropriate for AI assistance, and what validation steps must occur before anything leaves the firm.

A Checklist for Effective and Ethical AI Use in Law Firms

To harness the benefits of AI while staying inside ethical boundaries, firms should adopt some baseline guardrails:

Use AI as an Assistant, not a Decision-Maker. AI can accelerate drafting and organization, but legal conclusions must be formed – and owned – by the lawyer.

Verify Everything That Matters. Treat citations, quotes, and factual assertions as untrusted until confirmed through reliable sources. Mata is the cautionary example of what happens when plausible-looking output is not verified.

Protect Confidentiality by Design. Vet tools, understand data handling, and avoid sharing confidential or privileged information in platforms that do not provide appropriate safeguards.

Document Your Process. For significant work product, build a habit of documenting how AI was used and what validation steps were performed – especially when the work will be filed, relied upon by a client, or shared externally.

Train Lawyers and Staff. Competence is now partially technological. Training should cover prompt techniques, hallucination risk, confidentiality, and required verification workflows.

Have a Clear Firm Policy for AI Use. The policy must respect and incorporate any limitations or prohibitions that individual clients place on AI use.

For firms or lawyers new to AI, the best approach is to start small and low-risk: outlining, brainstorming, summarizing non-privileged materials, or generating internal checklists. Compare outputs, refine prompts, and develop a repeatable validation workflow before using AI for anything that will be filed or communicated externally.

Choosing AI Tools: Security, Privacy, and Confidentiality Considerations

Be aware that AI tools do not all operate in the same way. Some (like LexisAI) are closed and pull only from a defined database. Some (like ChatGPT) are open and pull from anywhere on the Internet. Some are secure in that they do not retain any of the information the user inputs, while others use the user’s data to train their underlying models, potentially compromising the confidentiality of user information. When in doubt, start by prompting the AI tool to describe its security and privacy features and link to sources, and use iterative prompts to create a full privacy and security profile for the tool. As with any other AI output, check that profile against the linked sources before relying on it.

Most importantly, approach AI as a “co-pilot” – a capable junior assistant that can improve speed and consistency, but still requires supervision, verification, and professional judgment at every stage.

Conclusion: Mastery of AI Use Is a Differentiator in the Modern Legal Arena

Artificial intelligence is a powerful addition to modern law practice, but it is not a shortcut around ethics, diligence, or responsibility. Used thoughtfully, it can save time, improve consistency, and enhance client service. Used carelessly, it can undermine credibility, expose confidential information, and lead to sanctions and lasting reputational harm.

The lawyers and firms that will thrive are not those who avoid AI, but those who understand it: its strengths, its limits, and the ethical obligations that remain constant. Competence today means being conversant with AI, using it deliberately, and remembering that the lawyer – not the tool – remains accountable.